With AI tools, anyone can work miracles with digital images. In the wrong hands, those miracles can turn into black magic. Here is why and how we strive to prevent that.

AI-driven image generation and editing tools have revolutionized the way we create and manipulate images. At the same time, they have raised serious concerns, and deepfakes are among them. We don’t mean a deepfaked Tom Cruise or Barack Obama, but fakes that are less spectacular, not meant to generate hype, and whose makers would prefer to go unnoticed. Document forgery is one of them.

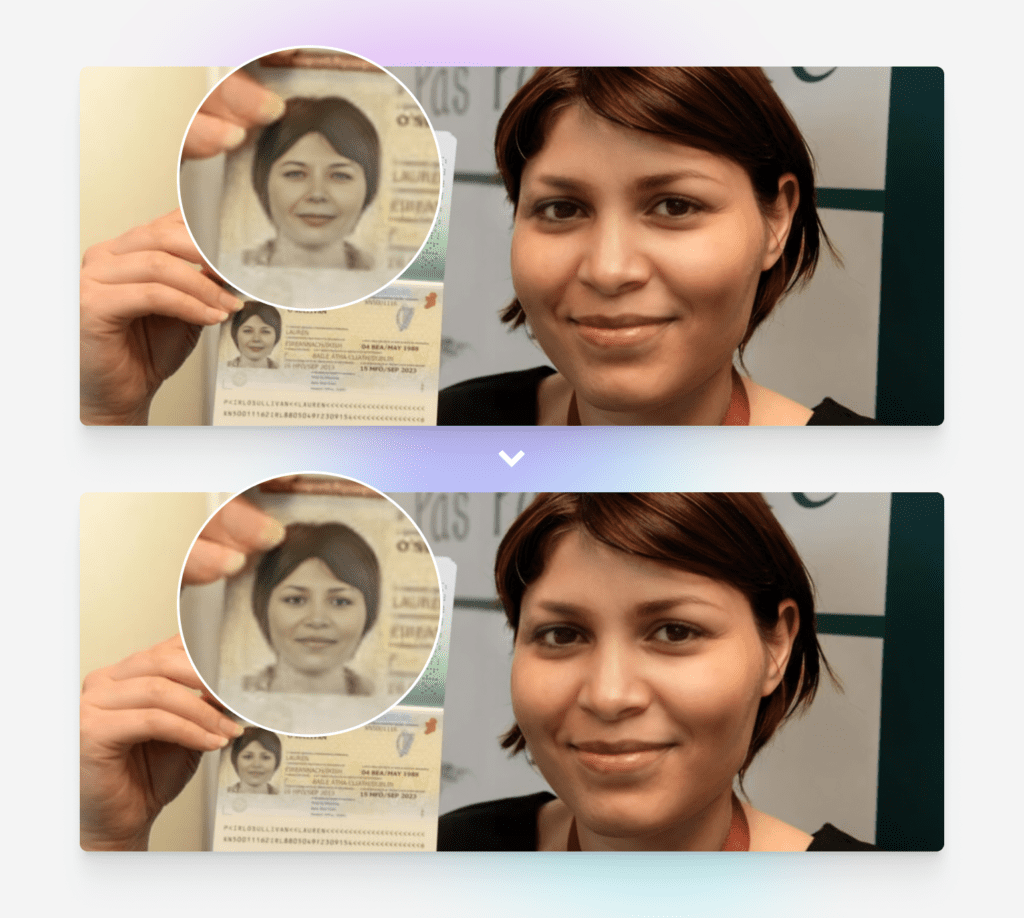

Imagine somebody has your ID. Nowadays, there is hardly anything they can do with the ID alone, because companies dealing with sensitive information ask you to provide a photo of yourself holding your ID document to verify your identity. For banks, cryptocurrency exchanges, and online payment processors, it’s a mandatory process called “ID verification” or “KYC” (Know Your Customer). Some ask for such a photo when you open an account. Some ask for it from time to time to make sure that you are genuinely who you claim to be.

But this is what malicious actors can potentially do with your ID or photo and AI tools:

This type of fraud is a big problem. Some people do it to literally steal money or data from the original document’s owner. Others just “prove” that they hold a strong passport to get access to international financial services. For example, a person from a financially restricted country (like Afghanistan or Syria) wants to be approved as a citizen of a more open jurisdiction like the US, EU, Japan, or Singapore.

Recently, we received reports that our Face Swapper and similar services are being promoted on the darknet as a means of forging documents. Along with that, we spotted suspicious traffic. So we had to stop it.

First, we collected images of documents and photos where a document is part of the picture. Then we identified rectangular contours in them, followed by more complex shapes.
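To make the idea of a "rectangular contour" more concrete, here is a minimal sketch of one possible check. It is not our production code: it assumes that some upstream step has already reduced a contour to four corner points, and the function names and the 15-degree tolerance are hypothetical choices for illustration.

```python
import math


def interior_angles(quad):
    """Return the angle (in degrees) at each corner of a polygon.

    `quad` is a list of (x, y) corner points given in order around the shape.
    """
    angles = []
    n = len(quad)
    for i in range(n):
        px, py = quad[i - 1]          # previous corner
        cx, cy = quad[i]              # current corner
        nx, ny = quad[(i + 1) % n]    # next corner
        v1 = (px - cx, py - cy)
        v2 = (nx - cx, ny - cy)
        norm = math.hypot(*v1) * math.hypot(*v2)
        if norm == 0:
            raise ValueError("repeated corner point")
        cos = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
        cos = max(-1.0, min(1.0, cos))  # guard against rounding errors
        angles.append(math.degrees(math.acos(cos)))
    return angles


def looks_rectangular(quad, tolerance_deg=15.0):
    """True if the shape has 4 corners and each is close to a right angle.

    The tolerance (an assumed value) leaves room for perspective distortion,
    since a hand-held card is rarely photographed perfectly head-on.
    """
    if len(quad) != 4:
        return False
    try:
        angles = interior_angles(quad)
    except ValueError:
        return False
    return all(abs(a - 90.0) <= tolerance_deg for a in angles)


if __name__ == "__main__":
    card = [(0, 0), (100, 5), (98, 68), (-2, 63)]   # slightly tilted card
    blob = [(0, 0), (10, 0), (5, 1), (0, 10)]       # irregular shape
    print(looks_rectangular(card))
    print(looks_rectangular(blob))
```

A check like this only narrows down candidates. As the next steps show, plenty of rectangular things are not documents.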

The next step was to collect images that may look like documents but are not: people holding newspapers, magazine covers, signs, or phones, as well as stock photos with a similar structure.

In the first iteration, we tried a trivial neural network architecture. This approach showed 85% accuracy.

Fun fact: at first, the AI falsely identified memes and comic images as documents, along with some other images containing faces with text nearby. The same sometimes happened with stock photos because the person in the image was shown against a solid background.
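A single accuracy number can hide exactly this kind of mistake, so it helps to break false alarms down by image category. The sketch below shows one way to do that. The record format, the category names, and the sample data are all invented for illustration and are not our real evaluation set.

```python
from collections import defaultdict


def accuracy(records):
    """Share of images where the prediction matches the true label."""
    correct = sum(1 for r in records if r["predicted"] == r["is_document"])
    return correct / len(records)


def false_positive_rate_by_category(records):
    """For each non-document category, the share wrongly flagged as a document."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        if not r["is_document"]:
            total[r["category"]] += 1
            if r["predicted"]:
                flagged[r["category"]] += 1
    return {cat: flagged[cat] / total[cat] for cat in total}


# Toy data, made up for this example only.
records = [
    {"category": "id_selfie", "is_document": True, "predicted": True},
    {"category": "id_selfie", "is_document": True, "predicted": True},
    {"category": "meme", "is_document": False, "predicted": True},
    {"category": "meme", "is_document": False, "predicted": False},
    {"category": "stock_photo", "is_document": False, "predicted": False},
    {"category": "stock_photo", "is_document": False, "predicted": False},
    {"category": "newspaper", "is_document": False, "predicted": False},
    {"category": "newspaper", "is_document": False, "predicted": False},
]

if __name__ == "__main__":
    print(accuracy(records))                         # 0.875
    print(false_positive_rate_by_category(records))  # meme: 0.5, others: 0.0
```

In this toy set the overall accuracy looks decent, yet half of the memes are flagged, which is the pattern that tells you where to collect more counterexamples.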

Therefore, in the second iteration, we applied more complex models that accounted for these corner cases and demonstrated 95% accuracy. Now the system immediately shows a warning saying that all images with IDs are prohibited and blocks the attempt.
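The warn-and-block step can be pictured as a simple gate in front of the face-swapping pipeline. The sketch below is an assumption about how such a gate could look, not a description of our actual service: the `gate_upload` function, the `Decision` type, the message text, and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical warning text shown to the user when an upload is rejected.
BLOCK_MESSAGE = "Images containing ID documents are prohibited."


@dataclass
class Decision:
    allowed: bool
    message: str


def gate_upload(document_score, threshold=0.5):
    """Decide whether an upload may proceed to face swapping.

    `document_score` is assumed to be a classifier output in [0, 1], where
    higher means "more likely to contain an ID document". The threshold is
    an illustrative value, not a tuned one.
    """
    if not 0.0 <= document_score <= 1.0:
        raise ValueError("document_score must be between 0 and 1")
    if document_score >= threshold:
        return Decision(allowed=False, message=BLOCK_MESSAGE)
    return Decision(allowed=True, message="")


if __name__ == "__main__":
    print(gate_upload(0.93))  # blocked, with the warning message
    print(gate_upload(0.04))  # allowed
```

Where the threshold sits is a trade-off: a lower value blocks more forgery attempts but also rejects more harmless memes and stock photos.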

All the other images are welcome. You can use Face Swapper to get super realistic face swapping with the highest resolution on the market to:

- Tune model faces for ads, blog posts, product photos

- Adapt your marketing visuals to a certain market

- Experiment with different looks and styles

- Replace faces in stock photos

- Anonymize people in photos

We encourage the ethical use of Face Swapper. All legitimate uses are fine. But please do not fool people on Tinder.